OIT has built a dedicated high-performance network infrastructure to help meet the needs of researchers who transfer large quantities of data within and beyond campus. This network, called UCI Lightpath, is funded by a grant from the National Science Foundation's Campus Cyberinfrastructure – Network Infrastructure Engineering program (NSF CC-NIE).

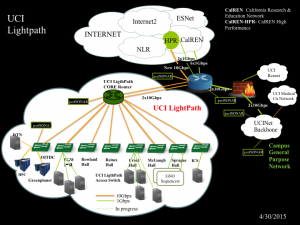

UCI Lightpath is composed of a Science DMZ with a 10 Gbps connection to the science and research community on the Internet, and a dedicated 10 Gbps network infrastructure on campus. A Science DMZ is a portion of the network whose equipment, configuration, and security policies are optimized for high-performance scientific applications rather than for general-purpose business systems or “enterprise” computing.
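As a rough illustration of what a 10 Gbps path means for large data transfers, the following sketch estimates the ideal time to move a dataset given its size and the link rate. It is a back-of-the-envelope calculation only, not an OIT tool: the `efficiency` factor is a hypothetical stand-in for protocol overhead, disk speed, and host tuning, and real throughput on any network depends on those end-host factors.

```python
def transfer_time_seconds(data_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Ideal transfer time: total bits divided by effective bits per second.

    `efficiency` is a hypothetical fraction (0, 1] of line rate actually achieved.
    """
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not (0 < efficiency <= 1):
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_bytes * 8                          # bytes -> bits
    effective_bps = link_gbps * 1e9 * efficiency   # Gbps -> bits per second
    return bits / effective_bps


if __name__ == "__main__":
    one_tb = 1e12  # 1 TB, decimal units
    for eff in (1.0, 0.8):
        secs = transfer_time_seconds(one_tb, 10, eff)
        print(f"{eff:.0%} of 10 Gbps: {secs / 60:.1f} minutes for 1 TB")
```

At full line rate, 1 TB (8 × 10¹² bits) over 10 Gbps takes 800 seconds, or about 13 minutes; at an assumed 80% efficiency it takes about 17 minutes.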

The initial infrastructure covers eight campus locations, including the OIT Data Center, where computing clusters such as HPC and Greenplanet reside. The UCI Lightpath network infrastructure is separate from the existing campus network (UCINet). The diagram shows the current status of UCI Lightpath.

For more information about UCI Lightpath and its access policy, please refer to the OIT website: http://www.oit.uci.edu/network/lightpath/